Planned, Structured, Supervised Student-AI Interaction

Brief Overview

In exploring AI-enhanced pedagogy, two complementary interaction models emerge. In the Student-Driven Interaction model, students take the lead by crafting prompts, critically evaluating AI responses, and improving their questioning. In contrast, in the AI-Driven Interaction model, a custom AI initiates guided dialogue, provides adaptive feedback, and scaffolds student responses according to predefined objectives. Both approaches encourage active learning, help students think about how they learn, and support deeper engagement with course content. They differ in who is in charge: students drive the inquiry in the first model, while the AI agent guides the learning sequence in the second. By comparing these frameworks, instructors can choose the balance of autonomy and structure that best aligns with their learning outcomes and assessment goals.

Student-Driven Interaction

In a student-driven AI interaction model, each student takes ownership of their interactions with a generative AI platform (ChatGPT, Claude, etc.). Beginning from a shared, structured starting prompt, students craft follow-up questions to probe AI-generated ideas and then critically assess the results. The instructor’s role is to facilitate by articulating the AI tool’s capabilities and limits, clarifying the learning objectives for each exchange, and providing versatile prompt templates and relevant content excerpts. This design emphasizes individual work and active learning, as students demonstrate their analytical skills, metacognitive skills (awareness of their own thinking), and ability to integrate AI-generated content into their reasoning through each purposeful interaction.

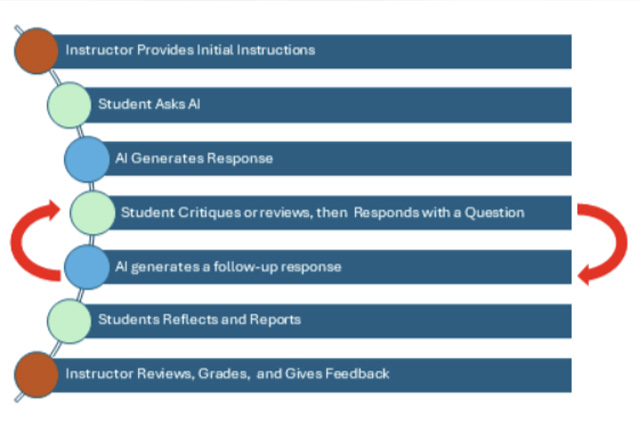

Figure 1 illustrates the process of student-driven AI interaction, in which students cycle through four phases: ask, review, reflect, and follow up. First, the student asks a question or prompts the AI. Next, they review the AI’s response, critiquing its assumptions, completeness, and alignment with course concepts. In the reflect phase, the student considers what they have learned, identifies any gaps or misconceptions, and determines whether the current output meets the desired learning objective. Then, the student follows up by crafting a new, more targeted prompt or piece of feedback to guide the AI toward deeper or more accurate insights. This cycle repeats until a satisfactory outcome is reached, either when the instructor’s predefined criteria are met or when students themselves judge that their work demonstrates sufficient understanding and justification of the AI-enhanced analysis. Finally, students document their overall experience and insights in a reflective report, following the instructor’s guidelines for format and content.

From the instructor’s perspective, preparation centers on:

- Establishing AI Literacy & Usage Policies: Educate students on how AI works, what uses are permitted (e.g., generating examples, clarifying concepts), and what is prohibited (e.g., submitting AI-generated final answers).

- Determining What Students Should Learn: Define the target skills and outcomes students should achieve as they interact with AI, and share these with students.

- Developing Prompt Frameworks: Create adaptable prompt stems and select source materials that guide exploration.

- Planning How Students Will Share Learning: Specify how students will document their AI dialogues (e.g., written reflections, concept maps, presentations, or recorded video) and establish transparent rubrics that weigh both AI engagement and independent reasoning.

- Fostering Equity & Rigor: Instructors must ensure that all students have reliable access to AI tools and that AI use supports, rather than replaces, students’ independent reasoning.

In a student-driven AI interaction model, grading goes beyond right or wrong answers to focus on how students engage with AI as a thinking partner. Instructors evaluate the depth of students’ thinking: how thoroughly they interrogate AI outputs, identify assumptions, and articulate their own reasoning. They also assess the clarity and coherence of AI-supported analyses, noting how well students combine AI suggestions with course material and their prior knowledge.

AI-Driven Interaction

In an AI-Driven Interaction model, the instructor designs and configures a custom AI (e.g., a custom GPT-based tutor or case-study simulator) that leads students through structured, goal-oriented learning scenarios. This approach is well suited to simulated problem-solving, case-based analysis, team-dynamics exercises, and targeted practice (e.g., math walkthroughs or module quizzes). AI-driven interactions offer students a dynamic, tailored learning experience that can accelerate skill development and deepen understanding. By engaging with a custom AI agent, students benefit from on-demand guidance, immediate feedback, and scaffolded challenges aligned with course objectives. This model fosters autonomy and metacognition, as students track their progress and reflect on AI-provided hints and their own reasoning. Because the AI’s behavior is configured in advance, every student receives a consistent yet adaptive learning experience. The instructor retains control by defining the AI’s knowledge base, interaction scripts, and feedback logic, ensuring that each exchange scaffolds toward the desired critical-thinking skill or learning outcome.

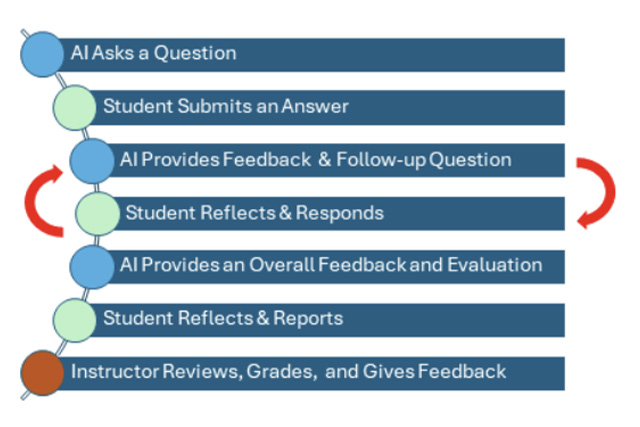

Figure 2 depicts the AI-Driven Interaction process, which begins with a custom AI presenting the student with a prompt or piece of content, such as a case study scenario, problem statement, or guided question. The student then submits a response, and the AI immediately delivers feedback, critique, hints, or a follow-up question designed to deepen understanding. In the next phase, the student reflects on this feedback and crafts a revised response, re-engaging with the AI. This loop of AI prompt → student response → AI feedback → student reflection continues until either the goals the instructor set when designing the activity are met or the student decides they have reached their learning goal. At the end of the session, the AI provides a summative evaluation, drawing on a rubric established by the instructor, that highlights areas of strength and opportunities for further growth. Finally, students document their overall experience and insights in a reflective report, following the instructor’s guidelines for format and content.

Instructor Preparation for AI-Driven Interaction:

- Educate Students on AI Literacy & Usage Policies: Explain how AI works, what uses are permitted (e.g., generating examples, clarifying concepts), and what is prohibited (e.g., submitting AI-generated final answers).

- Define and Share Target Skills and Outcomes: Explicitly define the skills and outcomes students are expected to achieve through their AI interactions, and share them with students.

- Curate and Prepare Training Materials: Select and prepare the source materials that the AI agent will draw on as its knowledge base.

- Design AI Behavior & Interaction Scripts: Design the structured dialogue flows (e.g., prompts, follow-ups, hints, and challenge questions) that the agent will use to guide learning paths.

- Test & Refine Agents: Pilot the AI agent with others to verify its accuracy, clarity, and alignment with objectives, and refine its scripts as needed.

- Create a Structure for Student Reporting & Evaluation: Specify how students will document their AI dialogues (e.g., written reflections, concept maps, presentations, or recorded video) and establish transparent rubrics that weigh both AI engagement and independent reasoning.

- Develop Evaluation Criteria for the AI to Use with Students: Develop criteria, such as a rubric, that the AI can apply to give formative feedback during the session and a summative evaluation at its end.

- Foster Equity and Maintain Rigor: Instructors must ensure that all students have reliable access to AI tools and that AI use supports, rather than replaces, students’ independent reasoning.

In an AI-Driven Interaction model, grading balances traditional ideas of correctness with evidence of iterative learning and knowledge integration. Instructors can assess the accuracy of students’ final solutions while also evaluating how students build their understanding step by step as they work through the AI-guided interaction. Rubrics might include criteria for solution correctness, progressive refinement (e.g., how students incorporated AI hints to improve their work), and knowledge transfer (e.g., drawing on prior coursework to critique or extend AI suggestions).

Student-Driven vs AI-Driven Interaction Comparison

Below is a comparison of the student-driven and AI-driven interaction models. It highlights key dimensions such as suitability for project-based learning, educator responsibilities, grading focus, reporting formats, accessibility considerations, instructional challenges, and learning benefits.

Project-Based Learning (PBL) Suitability

Student-Driven Interaction: AI serves as a flexible research assistant across all PBL phases, supporting initial brainstorming and idea generation, enabling critique of AI outputs, and facilitating iterative refinement processes.

AI-Driven Interaction: A custom AI simulates stakeholders and domain experts, generates case details and evolving requirements, assigns team roles dynamically, and provides real-time feedback on deliverables such as business-model canvases and design prototypes.

Educator Role

Student-Driven Approach: Instructors teach AI literacy and usage policies, scaffold initial prompts and supporting content, define learning objectives and reflection criteria, review students’ critiques of AI outputs and their reflections, and grade student work while delivering feedback.

AI-Driven Approach: Instructors teach AI literacy and usage policies; define the AI agent’s objectives, knowledge base, behavior scripts, and evaluation rubrics; curate and test training materials for the agent; monitor AI performance, adjusting scripts and mitigating bias; and review and grade student-AI interactions while providing targeted feedback.

Grading Focus

Student-Driven Assessment: Assessment focuses on the depth of students’ reflection and their awareness of their own thinking, the sophistication of follow-up prompts as evidence of thoughtful, informed engagement, the clarity of AI-augmented analyses, and the accuracy of final solutions against expert criteria.

AI-Driven Assessment: Grading emphasizes evidence of step-by-step improvement that shows how students used and adapted the AI’s prompts and hints, along with reflective justification linking AI guidance to disciplinary concepts.

Reporting Formats

Student-Driven Documentation: Students produce written journals or annotated AI chat logs, audio memos or concept maps, in-class presentations or video reflections, and exported transcripts with student annotations.

AI-Driven Documentation: Documentation includes exported AI-student chat transcripts, AI-generated summary reports and analytics dashboards, concept maps or infographics, and in-class or video presentations showcasing AI agent interactions that link AI feedback to learning objectives.

Universal Design for Learning (UDL) & Accessibility

Student-Driven Design and UDL: This approach offers multiple means of engagement through text, audio, and visual scaffolds. Students can choose a written, audio, or visual reflection mode, and the AI provides flexible pathways to learning goals by offering alternative explanations in various formats and at different levels of complexity.

AI-Driven Accessibility: The AI-driven model can incorporate accessibility features such as screen-reader compatibility and adjustable text complexity, and it also offers students a choice of reflection modes to support diverse learners.

Instructional Challenges

Student-Driven Model: Primary concerns include ensuring equitable AI access for all students, preventing over-reliance on AI shortcuts, and designing rubrics that value independent insight alongside AI use.

AI-Driven Model: Key challenges encompass ensuring equitable AI access and infrastructure, managing the complexity of building and maintaining custom AI agents, mitigating AI bias and misinformation, and balancing AI autonomy with necessary human oversight.

How AI Promotes Learning

Student-Driven Learning Benefits: This approach promotes autonomy and ownership, building agency as students craft and refine their own inquiries. It fosters metacognitive growth, as reflecting on AI outputs deepens students’ understanding of their own thinking processes, and it develops transferable inquiry skills, such as prompt engineering and critical evaluation, that generalize across contexts.

AI-Driven Learning Benefits: The AI-driven model provides guided scaffolding through just-in-time hints, keeping students in their zone of proximal development. It offers personalized pacing, with adaptive difficulty that tailors challenges to each student’s needs, and it ensures contextual relevance through simulations that mirror authentic, discipline-specific problems, making learning more directly applicable.

Enas Aref is a former AI Graduate Fellow with the WMU Office of Faculty Development and doctoral instructor in the Industrial & Entrepreneurial Engineering & Engineering Management Department at WMU, and is currently an Assistant Teaching Professor of Management & Technology in the College of Engineering and Innovation at Bowling Green State University. Research interests include Ergonomics and Human Factors, STEM Education, Artificial Intelligence, User Experience (UX), and Engineering Management.